Overview

Big query connected service allows to synchronize Encodify module data and logs to Google Big Query schema.

Configuration

Connected service is controlled by the corresponding feature flag.

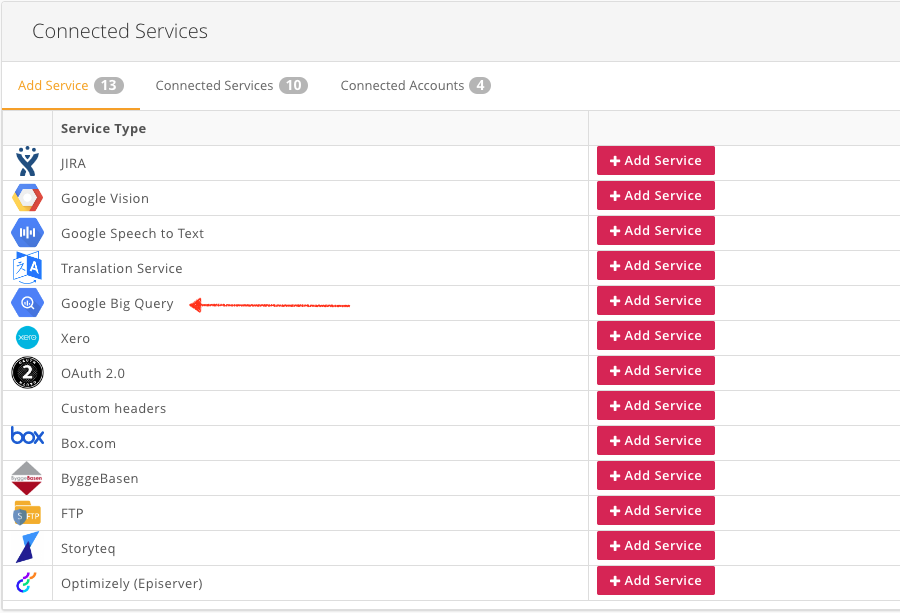

Google Big Query connected service will be available as Service type if enabled by the feature flag

Generate Access Key from Google Cloud

Access key is required to configure the connected service for Google Big Query. This can be done using Google Cloud Service Account.

With existing service account the access key in JSON format can be created in Google Cloud >Credentials > Service account key.

Connected Account

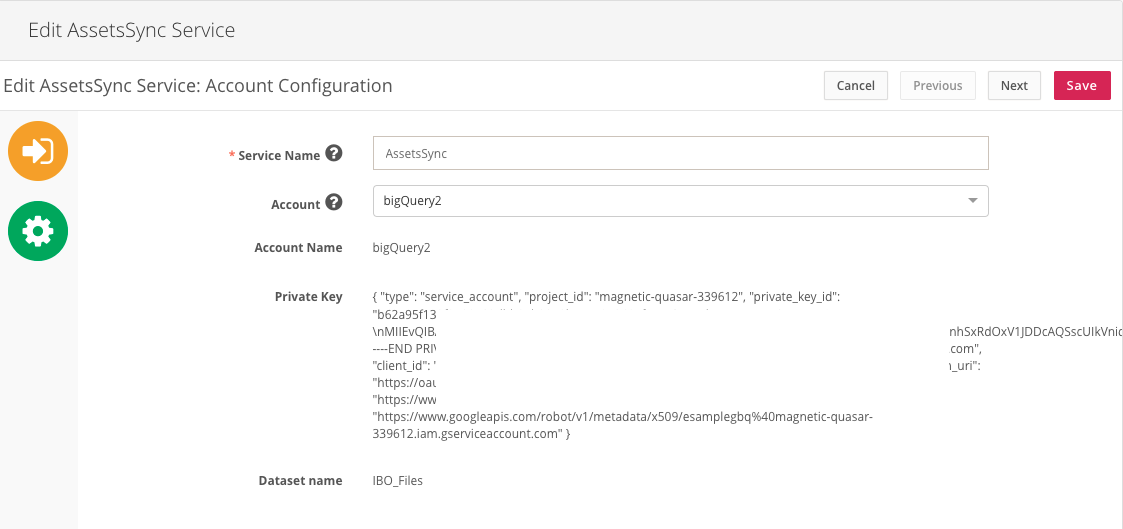

Google Big Query connected account is created as a step in connected service configuration and requires the following setting to be specified:

Account Name | Unique name of the account. |

Private Key | Private key for the BigQuery project in Google Cloud. How to create access key |

Dataset Name | Name of the dataset created in BigQuery project in Google cloud. |

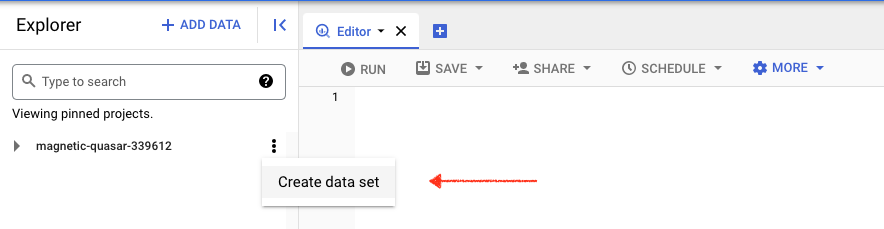

Note: Dataset needs to be created before saving connected account.

Connected Service

The following settings are required for creating new Google BigQuery connected service:

Step 1: Account selection / Configuration | |

Service Name | Unique name of the connected service. |

Account | Google Big Query Connected account: Select from the list of previously created, or created new. |

Account name | Unique name of the account. Filled in automatically when previously created connected account is selected. |

Private Key | Private key for the BigQuery project in Google Cloud. Filled in automatically when previously created connected account is selected. |

Dataset Name | Name of the dataset created in BigQuery project in Google cloud. Filled in automatically when previously created connected account is selected. |

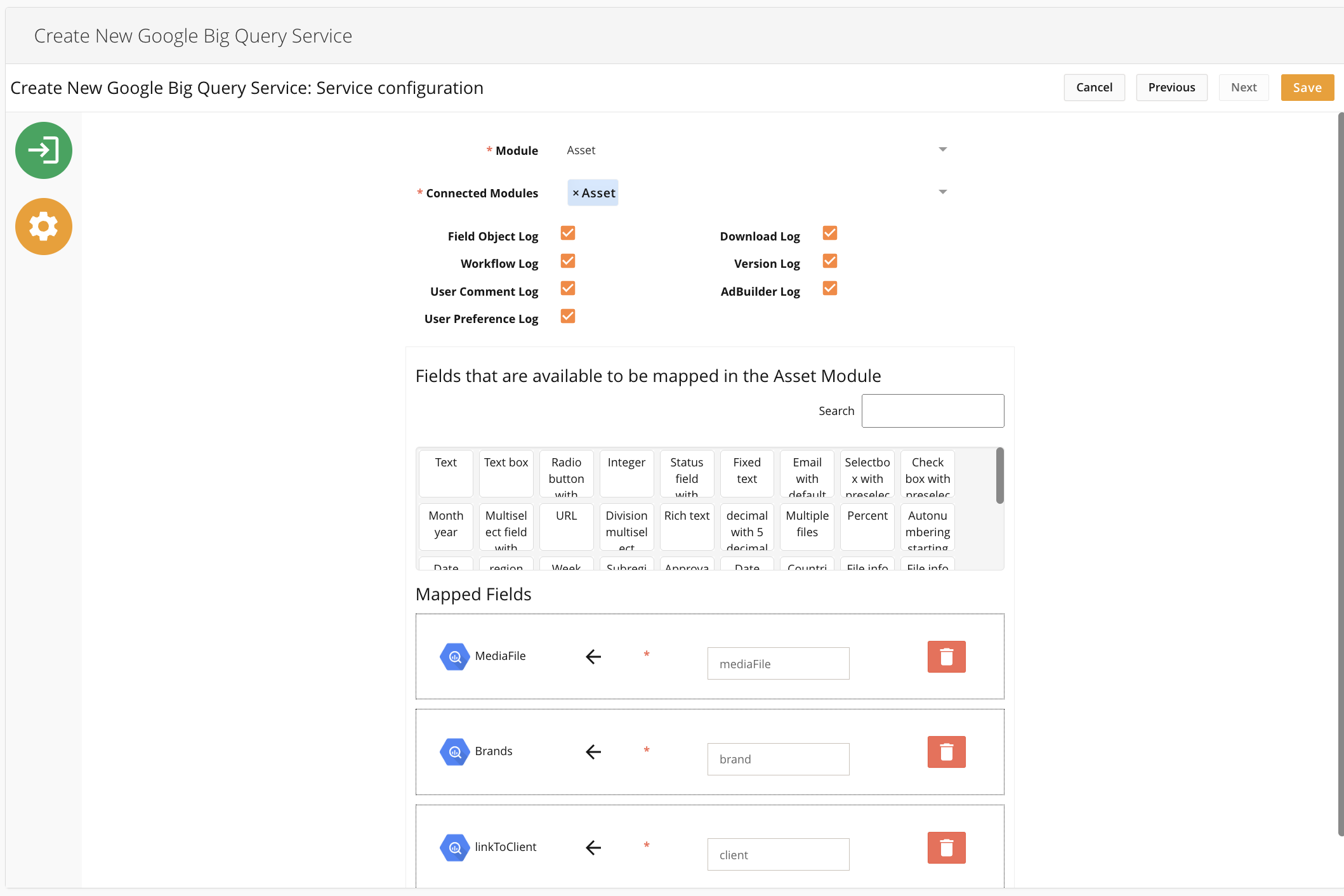

Step 2: Service Configuration | |

Module | Module where scheduled action will be addded to sync data to Google Big Query. |

Connected Modules | Module which data will be synced to the Google Big Query table. Several modules can be selected. |

Logs | Select types of logs to be exported along with the module data. Export of logs is applicable to all modules selected in "Connected Modules" field. |

In the field mapping section, select fields that are needed to be synchronized and specify mapping.

Even though righ-hand field is a free text field, note the following rules when specifying field names:

Whitespaces are not allowed

Special and national characters are not allonot use the following reserved sql key words in field names:

"add", "all", "alter", "and", "any", "as", "asc", "backup","between", "by", "case", "check", "column", "constraint", "create", "database", "default", "delete", "desc", "distinct", "drop", "exec", "exists", "foreign", "from", "full", "group", "having", "in", "index", "inner", "insert", "into", "is", "join", "key", "like", "limit", "not", "null", "or", "order", "outer", "primary", "procedure", "replace", "right", "rownum", "select", "set", "table", "top", "truncate", "union", "unique", "update", "values", "view", "where"

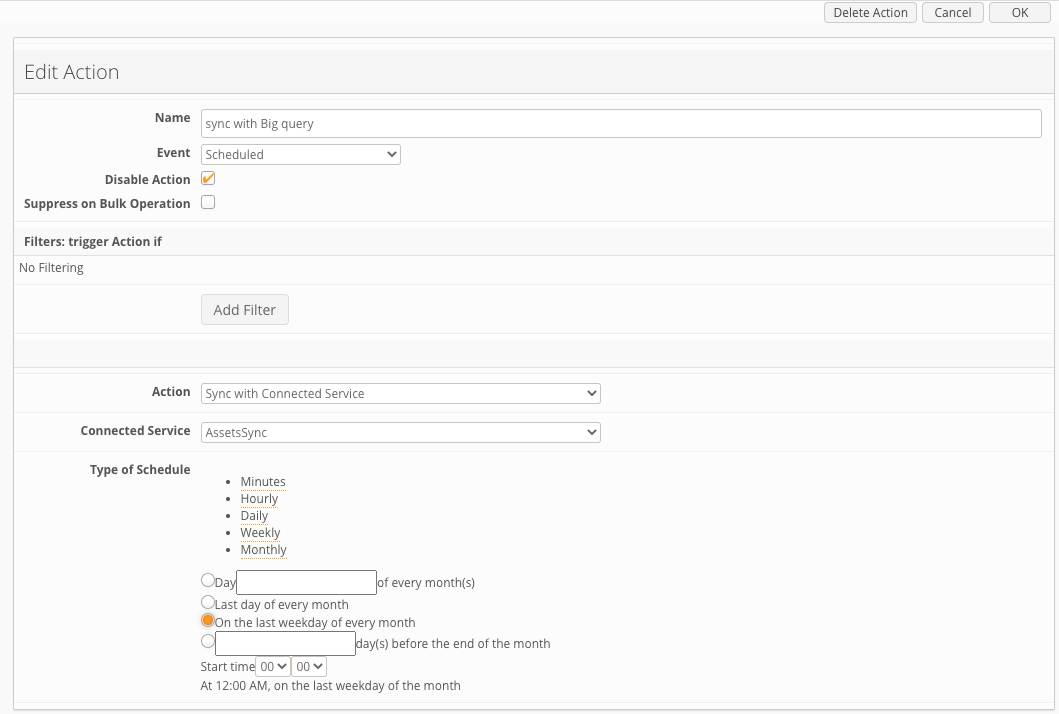

Action Configuration

For the Google Big Query connected service to start working, scheduled action needs to be created in the module that has been mapped in "Module" field in connected service configiration.

Syncronization with Google Big Query connected service is supported for "Scheduled" type of event only - other types of events are not supported.

Data Synchronization

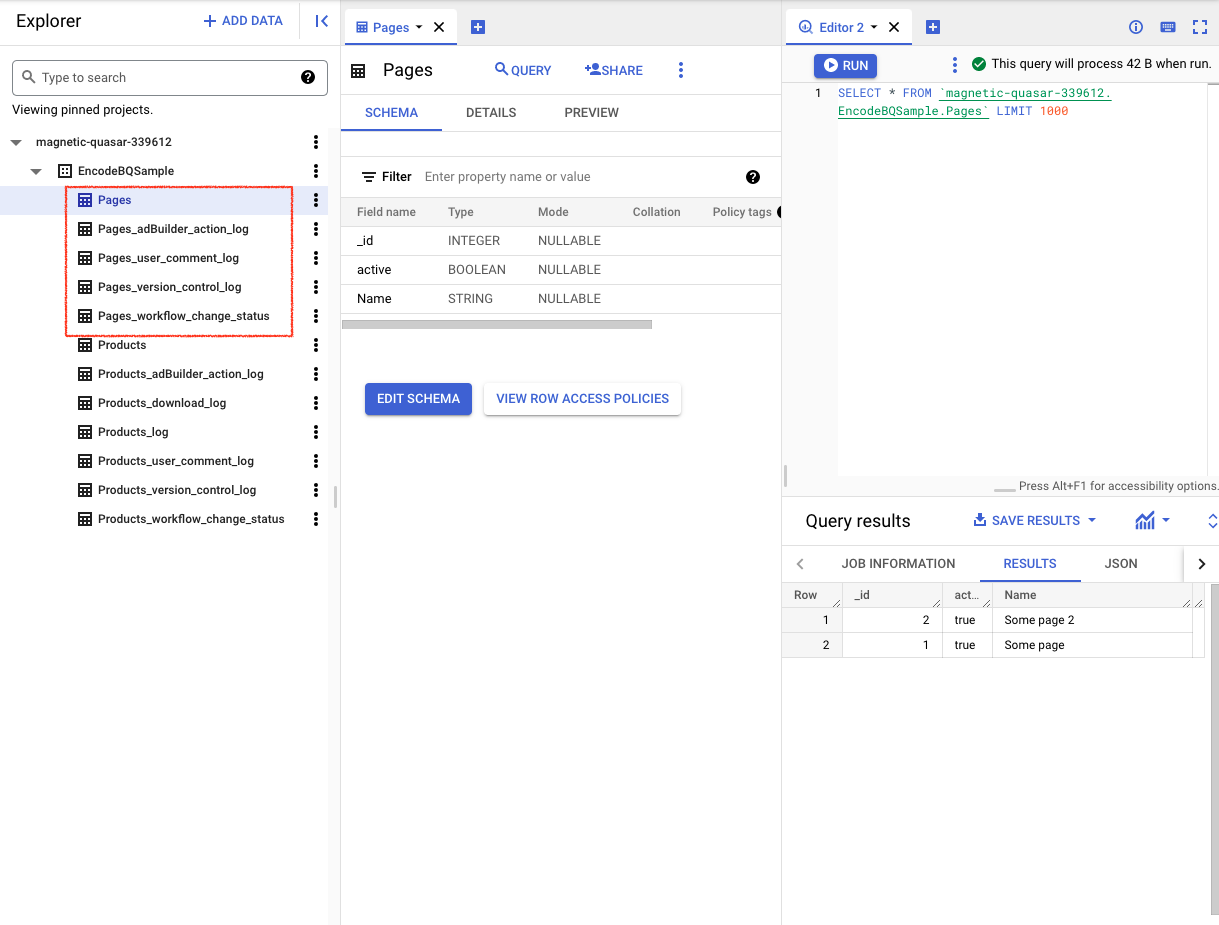

As a result of the data synchronization by the Google Big Query connected service, the following is created in BigQuery schema:

Separate table with exported (synced) data for each module mapped

Separate table for each type of log selected in connected service cpnfiguration

Synchronization Details

With every synchronization of the module data, table with data is re-created in Google Big Query. Therefore adding or deleting mappings will be reflected in the table in Google Big Query on the next synchronization. This should also be taken into account when setting up queries or intervals for sync as at some point table with data may be unavailable while being re-created.

Tables with logs are not re-created on each synchronization run - they are updated with new data (if any).

Required Permissions on Google BigQuery service side

Dataset:

- bigquery.datasets.get

- bigquery. tables.create

- bigquery.tables.delete

- bigquery.tables.get

- bigquery.tables.updateData

Project:

- bigquery.jobs.create

Known Issues and Limitations

When setting up the schedule for synchronization, take into account the volume of data and frequency. Every time module data is synced, the whole table with data is re-created in Google Big Query. For large volumes of data (especially exporting millions of log records) synchronization can take up to several hours.

Unmapping module in connected service configuration does not delete table in Google Big Query.

Selected logs for export are applicable to all modules mapped. It is currently not possible to specify log export individually per module. As a workaround, create a separate connected service for each module.

Module and field option translations are not supported. Original (untranslated) value will be synced to the BigQuery table.

Module names are used as table names - and module names should comply with table name restrictions in BigQuery -https://cloud.google.com/bigquery/docs/tables#table_naming